
Chris is the Staff PHP Developer Advocate and Server SDK Initiative Lead. He has been programming for more than 15 years across various languages and types of projects from client work all the way up to big-data, large scale systems. He lives in Ohio, spending his time with his family and playing video and TTRPG games.

Step-by-Step: Adding Vonage APIs to Your AI Agent with MCP

Have you ever wanted to build your own AI chatbot that can do more than just answer questions? What if your chatbot could check your account balance, send messages on your behalf, or interact with other services? That's exactly what we'll learn to build today using Anthropic's Claude API and the new Vonage Model Context Protocol (MCP) API Bindings Server (aka: Tooling Server).

In this tutorial, we'll create a chatbot that can have conversations with you while also being able to use external tools - specifically, we'll integrate with the Vonage Tooling MCP Server to check account balances as an example.

TL;DR: You can find the complete working example in our GitHub repository.

Before we start, make sure you have:

Node.js installed (version 18 or higher, which current releases of the Anthropic and MCP SDKs expect)

Basic JavaScript knowledge

A Vonage API account (for the balance checking example)

An Anthropic API key (used to call the Claude API)

To complete this tutorial, you will need a Vonage API account. If you don’t have one already, you can sign up today and start building with free credit. Once you have an account, you can find your API Key and API Secret at the top of the Vonage API Dashboard.

Claude is Anthropic's AI assistant that excels at natural language understanding and generation. The API allows you to integrate Claude's capabilities into your applications. Unlike some other AI APIs, Claude is particularly good at:

Following instructions precisely

Handling complex reasoning tasks

Working with tools and function calling

While we have talked about what an MCP server is in the past, here is a quick recap: the Model Context Protocol (MCP) is a standardized way to connect AI assistants to external data sources and tools. Think of it as a universal adapter that lets your AI assistant "talk to" other services like databases, APIs, or applications.

Instead of writing custom integrations for each service, MCP provides a standard interface that AI assistants can use to discover and interact with tools automatically.
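To make that "standard interface" a little more concrete: MCP messages are JSON-RPC 2.0, and a client discovers and runs tools with the `tools/list` and `tools/call` methods. The sketch below only builds those two request objects so you can see their shape. The `jsonRpcRequest` helper is a hypothetical name for illustration, and the empty `arguments` object for the balance tool is an assumption. In this tutorial the MCP SDK's `listTools()` and `callTool()` create these messages for you.

```javascript
// Illustrative only: the MCP SDK builds and sends these messages internally.
let nextId = 1;

// Hypothetical helper: wrap a method and optional params in a JSON-RPC 2.0 request.
function jsonRpcRequest(method, params) {
  const request = { jsonrpc: '2.0', id: nextId++, method };
  if (params !== undefined) {
    request.params = params;
  }
  return request;
}

// Ask a server which tools it offers.
const listToolsRequest = jsonRpcRequest('tools/list');

// Ask a server to run one tool. The empty arguments object is an assumption.
const callToolRequest = jsonRpcRequest('tools/call', {
  name: 'balance',
  arguments: {}
});

console.log(JSON.stringify(listToolsRequest));
console.log(JSON.stringify(callToolRequest));
```

Every MCP server answers the same two methods, which is why the chatbot can work with servers it has never seen before.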

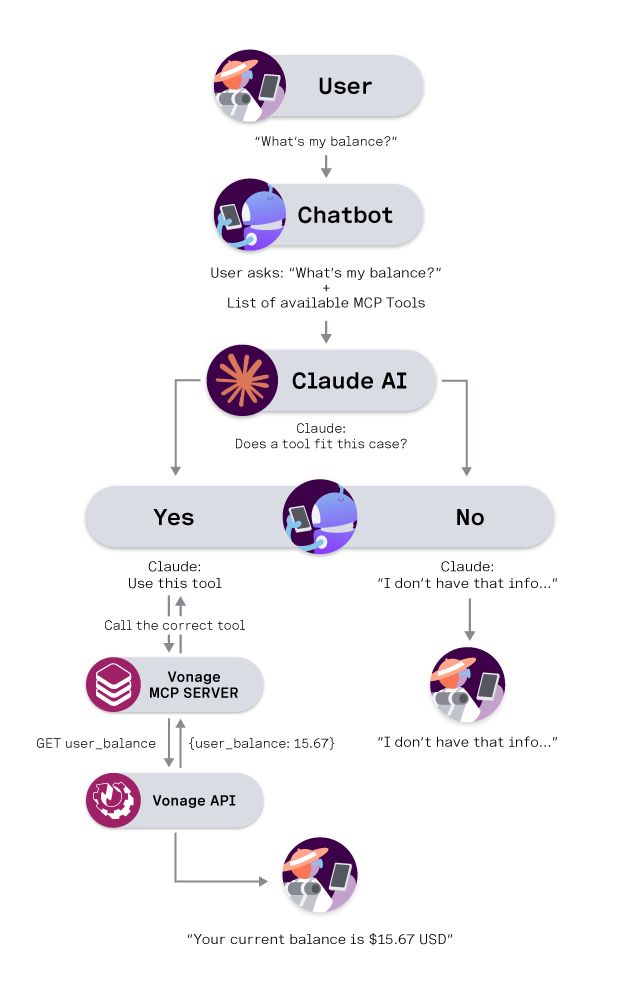

Before we start coding, let’s visualize how everything connects. In order to talk to Claude, your chatbot needs to orchestrate all the moving parts:

You (the user) type a message like: “Can you check my Vonage account balance?”

The chatbot sends that message, along with a list of available MCP tools, to Claude.

Claude (the AI) reads your message and decides whether a tool can help.

If so, it responds with a special tool_use instruction.

If not, it just answers directly.

The chatbot detects that tool-use request, runs the matching MCP tool (in this case, “balance”), and collects the result.

The MCP server calls the Vonage API behind the scenes to get your balance.

The result is returned to Claude, which then replies naturally: “Your current balance is $15.67 USD.”

Visual diagram showing how a user request moves through the chatbot, Claude AI, and the Vonage MCP Server to retrieve a real account balance using the Vonage API.
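Another way to picture this round trip is as the `messages` array growing with each step. The sketch below is a hand-written illustration rather than real API output: the tool-use ID, the assistant's interim text, and the tool's output string are made-up placeholders, while the block shapes (`tool_use` with `id`, `name`, and `input`; `tool_result` with `tool_use_id` and `content`) follow the Claude Messages API.

```javascript
// Hand-written illustration of the conversation history; no API calls are made.
const history = [];

// 1. The user asks a question.
history.push({ role: 'user', content: 'Can you check my Vonage account balance?' });

// 2. Claude answers with a tool_use block (the ID and text are placeholders).
const assistantTurn = {
  role: 'assistant',
  content: [
    { type: 'text', text: 'Let me check that for you.' },
    { type: 'tool_use', id: 'toolu_example_01', name: 'balance', input: {} }
  ]
};
history.push(assistantTurn);

// 3. The chatbot runs the tool and reports the result in a user turn,
//    linked to the request through tool_use_id.
const toolUse = assistantTurn.content.find(block => block.type === 'tool_use');
history.push({
  role: 'user',
  content: [
    { type: 'tool_result', tool_use_id: toolUse.id, content: 'Balance: 15.67' }
  ]
});

// 4. Claude turns the raw result into a natural reply.
history.push({ role: 'assistant', content: 'Your current balance is $15.67 USD.' });

console.log(history.map(turn => turn.role).join(' -> '));
// user -> assistant -> user -> assistant
```

Note that the tool result travels in a `user` turn: from Claude's point of view, the chatbot is reporting back on its behalf.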


Let's start by examining the structure of our chatbot. Here, I’ll explain the core components and functionality, but you’ll notice that some functions are omitted. Jump to the How to Run Your Chatbot section to implement the chatbot.

```javascript
import Anthropic from '@anthropic-ai/sdk';
import { Client } from '@modelcontextprotocol/sdk/client/index.js';
import { StdioClientTransport } from '@modelcontextprotocol/sdk/client/stdio.js';
import readline from 'readline';
import { promisify } from 'util';
import fs from 'fs/promises'; // used later to read mcp-config.json
import path from 'path';      // used later to build the config file path

class ClaudeChatbot {
  constructor() {
    // Initialize the Anthropic API client
    this.anthropic = new Anthropic({
      apiKey: process.env.ANTHROPIC_API_KEY
    });

    // Set up command line interface
    this.rl = readline.createInterface({
      input: process.stdin,
      output: process.stdout
    });
    this.question = promisify(this.rl.question).bind(this.rl);
    this.conversationHistory = [];

    // MCP-related properties
    this.mcpClients = new Map();     // server name -> connected MCP client
    this.availableTools = new Map(); // tool name -> { serverName, tool, client }
    this.model = 'claude-3-5-sonnet-20241022';
  }
}
```

What's happening here?

We are creating a small class (ClaudeChatbot) to handle the chatbot code. It connects to the Claude API, sets up some command-line tools like readline to read user input, and then sets up a place to store all of the MCP servers that our code knows about.

The magic of our chatbot comes from its ability to connect to MCP servers. Here's how we do it:

```javascript
// Methods of the ClaudeChatbot class.
// Requires: import fs from 'fs/promises'; import path from 'path';

async loadMcpServers() {
  try {
    const configPath = path.join(process.cwd(), 'mcp-config.json');
    const configData = await fs.readFile(configPath, 'utf8');
    const config = JSON.parse(configData);

    console.log('📡 Connecting to MCP servers...');
    for (const [serverName, serverConfig] of Object.entries(config.mcpServers)) {
      await this.connectMcpServer(serverName, serverConfig);
    }
    console.log(`✅ Connected to ${this.mcpClients.size} server(s)`);
  } catch (error) {
    if (error.code === 'ENOENT') {
      console.log('⚠️ No MCP configuration found, continuing without tools');
    } else {
      // For example, invalid JSON or a missing "mcpServers" key
      console.log(`⚠️ Could not load MCP configuration: ${error.message}`);
    }
  }
}

async connectMcpServer(serverName, config) {
  try {
    // Create a transport layer for communication
    const transport = new StdioClientTransport({
      command: config.command,
      args: config.args,
      env: { ...process.env, ...config.env }
    });

    // Create an MCP client
    const client = new Client({
      name: `claude-chatbot-${serverName}`,
      version: '1.0.0'
    }, {
      capabilities: { tools: {} }
    });

    // Connect and discover available tools
    await client.connect(transport);
    const toolsResponse = await client.listTools();
    this.mcpClients.set(serverName, client);

    // Register each tool for later use
    for (const tool of toolsResponse.tools) {
      this.availableTools.set(tool.name, {
        serverName,
        tool,
        client
      });
    }
  } catch (error) {
    console.log(`⚠️ Failed to connect to ${serverName}: ${error.message}`);
  }
}
```

We read a configuration file, mcp-config.json, which tells us which servers to use and how to start them. For each server, we create a StdioClientTransport, which launches the server as a child process and talks to it over standard input and output. We then connect an MCP client over that transport and call the listTools() method to get back every tool the server offers. Each tool is stored in availableTools, keyed by its name, so we can look it up later.
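One detail worth knowing about a registry keyed by tool name: if two servers expose a tool with the same name, the later one silently replaces the earlier one. The sketch below shows one possible way to handle that, keeping the first registration and reporting the duplicates. `registerTools`, the `other` server, the `lookup` tool, and the tool descriptions are all hypothetical names for illustration and are not part of the tutorial's repository.

```javascript
// Hypothetical helper: add a server's tools to a Map keyed by tool name,
// keeping the first registration when two servers share a tool name.
function registerTools(registry, serverName, tools) {
  const skipped = [];
  for (const tool of tools) {
    if (registry.has(tool.name)) {
      skipped.push(tool.name);
      continue;
    }
    registry.set(tool.name, { serverName, tool });
  }
  return skipped;
}

// Made-up tool descriptors in the shape listTools() returns
// (name, description, inputSchema).
const emptySchema = { type: 'object', properties: {} };
const registry = new Map();

registerTools(registry, 'vonage', [
  { name: 'balance', description: 'placeholder description', inputSchema: emptySchema }
]);

const skipped = registerTools(registry, 'other', [
  { name: 'balance', description: 'placeholder description', inputSchema: emptySchema },
  { name: 'lookup', description: 'placeholder description', inputSchema: emptySchema }
]);

console.log(registry.get('balance').serverName); // vonage
console.log(skipped);                            // [ 'balance' ]
```

Whether to skip, overwrite, or prefix duplicates with the server name is a design choice; with a single MCP server, as in this tutorial, the question never comes up.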

To use the Vonage balance checking tool, we need to configure our MCP server. Edit the example and add your Vonage API Key and Secret:

```json
{
  "mcpServers": {
    "vonage": {
      "command": "npx",
      "args": ["-y", "@vonage/vonage-mcp-server-api-bindings"],
      "env": {
        "VONAGE_API_KEY": "abcd",
        "VONAGE_API_SECRET": "1234"
      }
    }
  }
}
```

Our configuration file will tell the MCP transport to run a specific package, @vonage/vonage-mcp-server-api-bindings, on the command line. We will also specify two environment variables, VONAGE_API_KEY and VONAGE_API_SECRET, which will be sent along to our MCP server. Checking our account balance only requires an API Key and Secret, but for other APIs you may need to pass an Application ID and a private key.
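Because a typo in this file only shows up later as a failed connection, it can be handy to check its shape up front. The following is a minimal sketch of such a check, assuming the same structure as the example above (a `mcpServers` object whose entries have `command`, optional `args`, and optional `env`). `validateMcpConfig` is a hypothetical helper, not something the starter project or the MCP SDK provides.

```javascript
// Hypothetical helper: return a list of human-readable problems found in a
// parsed mcp-config.json object. An empty list means the shape looks fine.
function validateMcpConfig(config) {
  const servers = config && config.mcpServers;
  if (!servers || typeof servers !== 'object' || Array.isArray(servers)) {
    return ['"mcpServers" must be an object keyed by server name'];
  }

  const problems = [];
  for (const [name, server] of Object.entries(servers)) {
    if (!server || typeof server.command !== 'string' || server.command === '') {
      problems.push(`${name}: "command" must be a non-empty string`);
      continue;
    }
    if (server.args !== undefined && !Array.isArray(server.args)) {
      problems.push(`${name}: "args" must be an array when present`);
    }
    if (server.env !== undefined) {
      const env = server.env;
      const isPlainObject = env !== null && typeof env === 'object' && !Array.isArray(env);
      if (!isPlainObject || Object.values(env).some(value => typeof value !== 'string')) {
        problems.push(`${name}: "env" must be an object of string values`);
      }
    }
  }
  return problems;
}

// The example configuration from this tutorial passes the check.
const exampleConfig = {
  mcpServers: {
    vonage: {
      command: 'npx',
      args: ['-y', '@vonage/vonage-mcp-server-api-bindings'],
      env: { VONAGE_API_KEY: 'abcd', VONAGE_API_SECRET: '1234' }
    }
  }
};

console.log(validateMcpConfig(exampleConfig)); // []
```

You could call a check like this right after `JSON.parse()` and print the problems before attempting any connection.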

Now comes the exciting part - making Claude aware of our tools and letting it use them:

```javascript
// Method of the ClaudeChatbot class
async getClaudeResponse() {
  // Prepare the list of available tools for Claude
  const tools = this.availableTools.size > 0 ?
    Array.from(this.availableTools.values()).map(toolInfo => ({
      name: toolInfo.tool.name,
      description: toolInfo.tool.description,
      input_schema: toolInfo.tool.inputSchema
    })) : undefined;

  const messageParams = {
    model: this.model,
    max_tokens: 1000,
    messages: this.conversationHistory
  };

  // If we have tools, tell Claude about them
  if (tools && tools.length > 0) {
    messageParams.tools = tools;
  }

  const message = await this.anthropic.messages.create(messageParams);

  // Check if Claude wants to use any tools
  if (message.content.some(content => content.type === 'tool_use')) {
    return await this.handleToolUse(message);
  }

  return message.content[0].text;
}
```

getClaudeResponse() sends Claude the conversation history together with a description of every tool we discovered: its name, its description, and its input schema. Claude never contacts the MCP servers itself. It only decides whether a tool is needed, and our code makes the actual call. If Claude decides that we need to use a tool, its response will contain a content block of the tool_use type. If we get such a response, we hand it off to the handleToolUse() method to handle calling the tool.

If Claude decides no tool is relevant to the message, we just return the text of its reply.
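A response from Claude is a list of content blocks, and a single response can mix text with one or more `tool_use` blocks. The sketch below shows two small helpers for splitting a response apart. `extractText` and `findToolUses` are hypothetical names, and the sample content is hand-written with a placeholder ID rather than real API output.

```javascript
// Join every text block in a response; a reply may contain several.
function extractText(content) {
  return content
    .filter(block => block.type === 'text')
    .map(block => block.text)
    .join('');
}

// Collect every tool request in a response.
function findToolUses(content) {
  return content.filter(block => block.type === 'tool_use');
}

// Hand-written sample in the shape of a response's content array.
const sampleContent = [
  { type: 'text', text: 'Let me check that for you.' },
  { type: 'tool_use', id: 'toolu_example_01', name: 'balance', input: {} }
];

console.log(extractText(sampleContent));                       // Let me check that for you.
console.log(findToolUses(sampleContent).map(use => use.name)); // [ 'balance' ]
```

Joining the text blocks is a little more defensive than reading `content[0].text`, which assumes the first block is always text.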

When Claude decides to use a tool, here's what happens:

```javascript
// Method of the ClaudeChatbot class
async handleToolUse(message) {
  let responseText = '';
  const toolResults = [];

  for (const content of message.content) {
    if (content.type === 'text') {
      responseText += content.text;
    } else if (content.type === 'tool_use') {
      console.log(`🛠️ Using tool: ${content.name}`);
      try {
        const toolInfo = this.availableTools.get(content.name);

        // Execute the tool through the MCP client
        const result = await toolInfo.client.callTool({
          name: content.name,
          arguments: content.input
        });

        toolResults.push({
          tool_use_id: content.id,
          content: result.content
        });
      } catch (error) {
        console.log(`❌ Tool ${content.name} failed: ${error.message}`);
        toolResults.push({
          tool_use_id: content.id,
          content: `Error: ${error.message}`,
          is_error: true
        });
      }
    }
  }

  // Send the tool results back to Claude for final response
  if (toolResults.length > 0) {
    // Add Claude's tool-use message to history
    this.conversationHistory.push({
      role: 'assistant',
      content: message.content
    });

    // Add tool results to history
    this.conversationHistory.push({
      role: 'user',
      content: toolResults.map(result => ({
        type: 'tool_result',
        tool_use_id: result.tool_use_id,
        content: result.content,
        is_error: result.is_error || false
      }))
    });

    // Get Claude's final response incorporating the tool results
    const followUpMessage = await this.anthropic.messages.create({
      model: this.model,
      max_tokens: 1000,
      // Resend the tool definitions: the history now contains tool_use and
      // tool_result blocks, and the API expects the matching tools to be declared
      tools: Array.from(this.availableTools.values()).map(({ tool }) => ({
        name: tool.name,
        description: tool.description,
        input_schema: tool.inputSchema
      })),
      messages: this.conversationHistory
    });

    // Join the text blocks instead of assuming the first block is text
    const followUpText = followUpMessage.content
      .filter(block => block.type === 'text')
      .map(block => block.text)
      .join('');

    return responseText + followUpText;
  }

  return responseText;
}
```

If we are invoking a tool, we walk through the content blocks in Claude's message. Text blocks are collected as-is. For each tool_use block, we look up the matching tool, call it through its MCP client, and record the result (or the error, flagged with is_error). Claude's message and the tool results are then appended to the conversation history and sent back to Claude, which provides the final response.
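The method above handles a single round of tool calls. If Claude's follow-up asks for another tool, for example to chain two tools together, the same steps can be repeated in a loop. The sketch below is one possible way to structure that, not the starter project's implementation: `runToolLoop`, `createMessage`, `callTool`, and `maxRounds` are hypothetical names, and the model call and tool call are passed in as functions so the loop can be tried with scripted replies and no network access.

```javascript
// Hypothetical sketch: keep calling the model until it stops requesting tools.
// createMessage(history) stands in for the Claude API call, and
// callTool(name, input) stands in for the MCP client's callTool().
async function runToolLoop({ createMessage, callTool, history, maxRounds = 5 }) {
  for (let round = 0; round < maxRounds; round++) {
    const message = await createMessage(history);
    history.push({ role: 'assistant', content: message.content });

    const toolUses = message.content.filter(block => block.type === 'tool_use');
    if (toolUses.length === 0) {
      // No tool requested: the text blocks are the final answer.
      return message.content
        .filter(block => block.type === 'text')
        .map(block => block.text)
        .join('');
    }

    // Run every requested tool and report each result, or its error.
    const results = [];
    for (const use of toolUses) {
      try {
        const output = await callTool(use.name, use.input);
        results.push({ type: 'tool_result', tool_use_id: use.id, content: output });
      } catch (error) {
        results.push({
          type: 'tool_result',
          tool_use_id: use.id,
          content: `Error: ${error.message}`,
          is_error: true
        });
      }
    }
    history.push({ role: 'user', content: results });
  }
  throw new Error(`Gave up after ${maxRounds} tool rounds`);
}

// Try it with scripted replies (placeholder ID and tool output).
const scriptedReplies = [
  { content: [{ type: 'tool_use', id: 'toolu_example_01', name: 'balance', input: {} }] },
  { content: [{ type: 'text', text: 'Your current balance is $15.67 USD.' }] }
];

runToolLoop({
  createMessage: async () => scriptedReplies.shift(),
  callTool: async () => 'Balance: 15.67',
  history: [{ role: 'user', content: 'Can you check my Vonage account balance?' }]
}).then(answer => console.log(answer));
```

The `maxRounds` cap is a safety net so that a model that keeps requesting tools cannot loop forever.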

How to Run Your Chatbot

First, clone the starter project and move into its directory:

```shell
git clone git@github.com:Vonage-Community/blog-mcp-javascript-api_tooling_chatbot.git
cd blog-mcp-javascript-api_tooling_chatbot
```

Rename mcp-config.json.example to mcp-config.json and replace the placeholders with your Vonage credentials. Then install the dependencies:

```shell
npm install
```

From the command line, give your project access to your Anthropic API Key:

```shell
export ANTHROPIC_API_KEY=your_anthropic_api_key_here
```

Note: If you are on a free Anthropic tier, you might not have access to the latest models. You’ll need to update CLAUDE_MODEL in your chatbot.js accordingly.

Finally, start the chatbot:

```shell
node chatbot.js
```
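If the chatbot stops with an authentication error, the most likely cause is that the API key was not exported in the current shell. A small startup check can make that failure easier to read. The sketch below is one way to do it under stated assumptions: `resolveSettings` is a hypothetical helper, and reading the model from a `CLAUDE_MODEL` environment variable is an assumption made here for illustration (the starter project may define it differently).

```javascript
// Hypothetical helper: read the settings the chatbot needs from an
// environment-like object and fail early with a readable message.
function resolveSettings(env, defaultModel) {
  const apiKey = env.ANTHROPIC_API_KEY;
  if (!apiKey) {
    throw new Error('ANTHROPIC_API_KEY is not set; export it before running the chatbot');
  }
  // Assumption: allow overriding the model through a CLAUDE_MODEL variable.
  return { apiKey, model: env.CLAUDE_MODEL || defaultModel };
}

// Example with a plain object standing in for process.env.
const settings = resolveSettings(
  { ANTHROPIC_API_KEY: 'placeholder-key' },
  'claude-3-5-sonnet-20241022'
);

console.log(settings.model); // claude-3-5-sonnet-20241022
```

In a real script you would pass `process.env` as the first argument.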

Here's what a conversation with your new chatbot might look like:

Animated terminal demo showing a ClaudeChatbot using the Vonage MCP Server to check a user's account balance in real time.


In that short exchange, your chatbot handled the entire workflow: Claude understood your request, invoked the balance tool through MCP, and fetched real data from the Vonage API.

This approach gives you several advantages:

Your chatbot code focuses on conversation management

MCP servers handle the complexity of integrating with external APIs

Claude handles the intelligence of when and how to use tools

Want to add weather data? Just add a weather MCP server

Need database access? Add a database MCP server

The core chatbot code doesn't need to change

MCP provides a consistent interface across different services

Tools are automatically discovered and documented

Error handling is standardized

Claude automatically decides when tools are needed

It can chain multiple tools together for complex tasks

It provides natural language explanations of what it's doing

Congratulations! You've built an AI chatbot that can not only have conversations but also interact with external services through tools. This is a powerful pattern that you can extend to integrate with virtually any service that has an MCP server.

The combination of Anthropic's Claude API and the Model Context Protocol creates a flexible, extensible platform for building AI assistants that can actually do things, not just talk about them.

Key takeaways:

Claude's API provides intelligent conversation and tool usage decision-making

MCP standardizes how AI assistants connect to external services

Tool integration happens automatically once you configure the connections

This architecture scales - you can add new capabilities without changing core code

Start with this foundation and experiment with different MCP servers to see what kinds of AI assistants you can build. The possibilities are endless!
