Google I/O 2025 Announcements Recap

Historically, Google I/O has been a conference that gets developers excited with its announcements: skydivers parachuting in to introduce Google Glass, Kotlin being introduced by dragging and dropping Java code and watching it shrink to a few lines of Kotlin, or Duplex being showcased to highlight the possibilities of conversational AI.

Google I/O is the annual event I always look forward to. This year, I didn’t join in Mountain View, but I did watch everything from my couch. Listening to the launches from my living room is not as exciting, but some announcements still got me hyped about the new possibilities for developers and consumers.

Here’s what stood out to me at Google I/O 2025 as a long-time member of the Google Developer Community and a Google Developer Expert.

As expected, AI was the key part of the show. Central to Google's strategy are its consumer and developer products, specifically the Gemini family of models. In particular, the conference spotlighted Gemini 2.5 Pro (top-level), 2.5 Flash (fast and affordable), Nano (built into the browser), and Diffusion (experimental, applying to text generation the diffusion approach we already have for image generation).

You’ll see below that most of the announcements were heavily centered on Gemini. Learn more about how Gemini gets more personal, proactive, and powerful.

AI Mode is the feature I think was missing. For a long time now, “Google it” has been a common expression for researching something online. However, especially among younger generations, I’ve noticed a shift towards saying “chat it” and towards researching on video-based platforms or interacting with large language models (LLMs) to obtain more complex and detailed answers.

AI Mode, now available in the US, opens up the possibility of asking longer and more complex queries, and it has the potential to be a game-changer for how we interact with AI. This new search mode gives you more detailed responses, and it can even follow up with helpful links. Where before you had to run several separate queries, now you can add more nuance to a single one and get an AI-powered response.

Lately, I’ve been learning about and creating a lot of content on Network APIs. One really powerful feature is the ability to reach users and devices where they are. For instance, businesses can check a device's status and determine whether capabilities such as data or SMS are available for that device. This way, you can pick the best available channel at that moment for your users. Google has created a similar capability, but for running AI. At Google I/O, they announced a very powerful way to check a device for its AI capacity. If a device can run a model locally, you can use client-side AI, and because server-side AI remains available as a fallback, the approach works across browsers and devices. Client-side AI offers heightened protection for sensitive data and privacy, and it keeps working when the device is offline or has a bad connection. Learn more about when to choose client-side AI.
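The client-first, server-fallback idea can be sketched as a small pure function. This is a minimal sketch and not Google's implementation: the `DeviceState` shape, the `chooseBackend` name, and the availability strings are assumptions made for illustration, so map them to whatever capability check your app actually uses.

```typescript
// Hypothetical availability states for an on-device model (assumed names).
type Availability = "unavailable" | "downloadable" | "downloading" | "available";

// Where a prompt should be sent.
type Backend = "client" | "server" | "none";

// Hypothetical snapshot of what we know about the device right now.
interface DeviceState {
  modelAvailability: Availability; // result of a capability check
  online: boolean;                 // e.g. taken from navigator.onLine in a browser
}

// Prefer on-device inference; fall back to a server only when we can reach one.
function chooseBackend(state: DeviceState): Backend {
  if (state.modelAvailability === "available") {
    return "client"; // data stays on the device and works offline
  }
  if (state.online) {
    return "server"; // model missing or still downloading, but the network is up
  }
  return "none";     // no local model and no connection: nothing to call
}

console.log(chooseBackend({ modelAvailability: "available", online: false }));
console.log(chooseBackend({ modelAvailability: "downloading", online: true }));
```

Keeping the decision separate from the actual capability check makes it easy to test, and you can swap in a real check later without touching the routing logic.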

With Flow, you can create cool videos with control over angles, motion, and perspective. You describe something in natural language, upload your own images or generate them with Imagen, and extend them with Veo 3’s native audio generation to create videos. Flow is part of Google AI Ultra and uses Veo, Imagen, and Gemini. You can watch some short films here.

I took an Android course years ago, but it’s been a while since I’ve done much with this ecosystem. However, I couldn’t skip talking about Android XR Smart Glasses. You can speak to the AI assistant about everything you can see and explore; the glasses have a microphone, a front-facing camera, and speakers. Catch up on all the Android highlights.

To create a Chrome extension, we need HTML, CSS, and JavaScript. We can build extensions locally and also publish them. A long time ago, I wrote a tutorial, Create Your First Chrome Extension in JavaScript to Hide Your API Keys, but now seems like a nice time to write a new one because there are new built-in AI APIs that allow you to use Gemini Nano on the client without requiring a server.
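As a rough idea of what calling Gemini Nano from extension code could look like, here is a hedged sketch. It assumes the built-in Prompt API exposes a global `LanguageModel` object with `availability()` and `create()`, and sessions with `prompt()` and `destroy()`, which is how I understand the API at the time of writing; check the current Chrome documentation before relying on these names. `askGeminiNano`, `getBuiltInModel`, and the interface names are hypothetical.

```typescript
// Minimal structural types for the parts of the built-in Prompt API used here.
// ASSUMPTION: these shapes mirror Chrome's API; verify against the official docs.
interface PromptSession {
  prompt(input: string): Promise<string>;
  destroy(): void;
}

interface LanguageModelLike {
  availability(): Promise<string>;
  create(): Promise<PromptSession>;
}

// Look the global up defensively: it does not exist in Node or in browsers
// without built-in AI support.
function getBuiltInModel(): LanguageModelLike | undefined {
  return (globalThis as unknown as { LanguageModel?: LanguageModelLike }).LanguageModel;
}

// Returns the model's answer, or null when on-device AI cannot be used,
// so the caller can decide on a fallback.
async function askGeminiNano(
  question: string,
  model: LanguageModelLike | undefined = getBuiltInModel()
): Promise<string | null> {
  if (!model) return null;
  if ((await model.availability()) !== "available") return null;

  const session = await model.create();
  try {
    return await session.prompt(question);
  } finally {
    session.destroy(); // release the session's resources
  }
}
```

Passing the model in as a parameter is a design choice for this sketch: it lets you test the function with a fake object outside the browser, while the default argument picks up the real global when it exists.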

When you open DevTools in your Chrome browser (for instance, by right-clicking a page and selecting “Inspect”), you’ll see tabs such as Elements, Performance, Network, and Sources. Now you can easily chat with Gemini to help you debug, test, and navigate them!

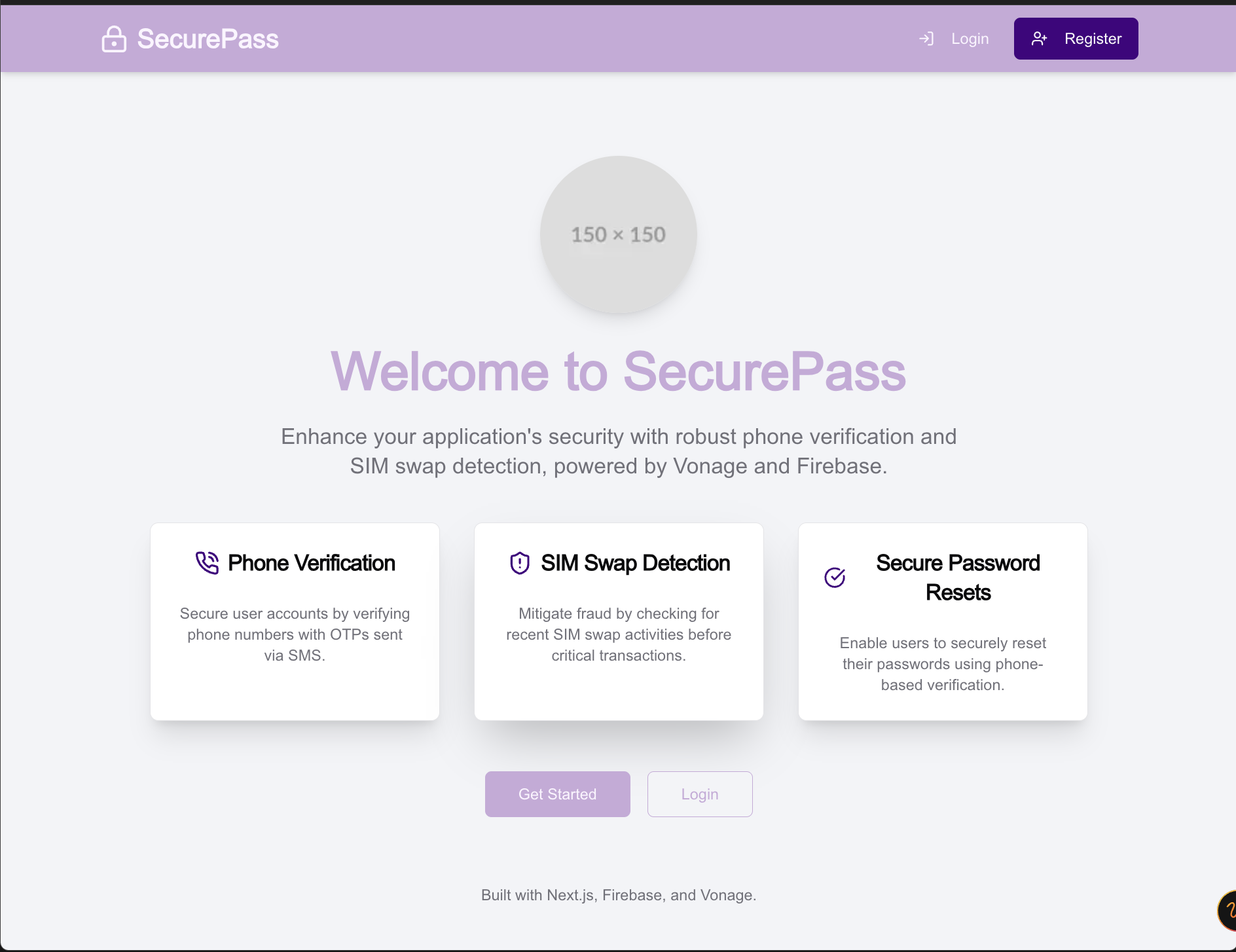

Here’s an example of it in use. I enabled the “AI Assistance” feature in the settings, opened a SIM swap project I had running on localhost:3000, and interacted with the AI on each tab to understand what kind of responses it can give. In the image above, I ask the AI whether the background of my password input is blue, and it gives me a properly detailed response.

AI Assistance in Chrome DevTools


Firebase Studio has been around for a while. It’s web-based, built on VS Code, and comes with live preview, a full cloud virtual machine, and more. At Google I/O 2025, new backend integrations were announced. It’s super interesting and straightforward to use; I tested it with one of my existing GitHub projects. I’ll give you an overview below, but I plan to write more content on this tool!

You open Firebase Studio and prototype an app with AI by describing it in natural language. You can also import code or add a GitHub repo, and then click to prototype with AI.

It then creates a customizable app blueprint (see below) that contains the features, style guides, and the new “Stack” that creates the backend of what you need (for instance, I’ve tried it with Cloud Firestore and Firebase Authentication). In a nutshell, it creates the visual aspect, the backend side, and even determines how data is stored.

App Blueprint

You can customize the prompt, test everything within the emulator with the support of Gemini, and when you’re ready, you can click to publish to the host and spin up the resources needed.


In the demo I tried, I added the server.js code, and it generated a UI for me using TypeScript, Next.js, and Tailwind CSS. I then had to fine-tune the customization and environment variables, and it finally worked; I was able to test everything from my browser and publish it.

App UI

Go and check the Top Firebase Studio updates from Google I/O 2025.


What if there were an asynchronous coding agent that you could assign tasks to? Especially the tasks you hate. It can write your tests and your documentation, and even generate a podcast summary of your codebase. You can then focus solely on the part of coding that you enjoy.

Welcome to Jules. Jules is an asynchronous coding agent, and it's built on Gemini 2.5.

Go ahead and build with Jules, your asynchronous coding agent.

There are tons of websites and videos out there covering what was announced at Google I/O 2025, such as the Google I/O 2025 Recaps Playlist. In this blog post, I offered my perspective on the announcements that seemed most interesting to me! I’ll keep you posted as I attend Google I/O Connect in Berlin, talk to fellow developers, take some workshops, and see how these announcements are being used in action.

Have a question or something to share? Join the conversation on the Vonage Community Slack, stay up to date with the Developer Newsletter, follow us on X (formerly Twitter), subscribe to our YouTube channel for video tutorials, and follow the Vonage Developer page on LinkedIn, a space for developers to learn and connect with the community. Stay connected, share your progress, and keep up with the latest developer news, tips, and events!