Share:

Shir Hilel is an Machine Learning Engineer at Vonage, working on the development and improvement of AI-based systems, including solutions powered by large language models. Her work focuses on building reliable and scalable data-driven capabilities.

Eliminating Hallucinations in LLM-Driven Virtual Agents

Time to read: 10 minutes

Learn how Vonage AI Studio eliminates LLM hallucinations using structured reasoning fields and schema order refinements.

Vonage AI Studio is a low-code platform for building and managing virtual agents across voice and digital channels, with AI operating behind the scenes to understand users and drive intelligent conversations. For many years, this platform has continuously evolved its NLU engine, moving from keyword-based approaches to embeddings-based models, and today to LLM-powered understanding, as new technologies were adopted over time.

Our virtual agents platform enables organizations to configure conversational agents that detect intents, extract parameters, validate user inputs, and guide conversations. The underlying LLM receives the conversation context together with the configured intents, parameters, and validation rules, and returns a structured JSON output that determines the next conversational step. As the system grew, we repeatedly encountered LLM hallucinations, outputs that were not grounded in the configuration or the user input, and these caused instability and unpredictable behavior.

This blog post explains improvements introduced to enhance LLM’s output accuracy, stability, and reliability. It outlines the challenges faced, the changes applied, and how these adjustments improved the overall quality of the system’s responses.

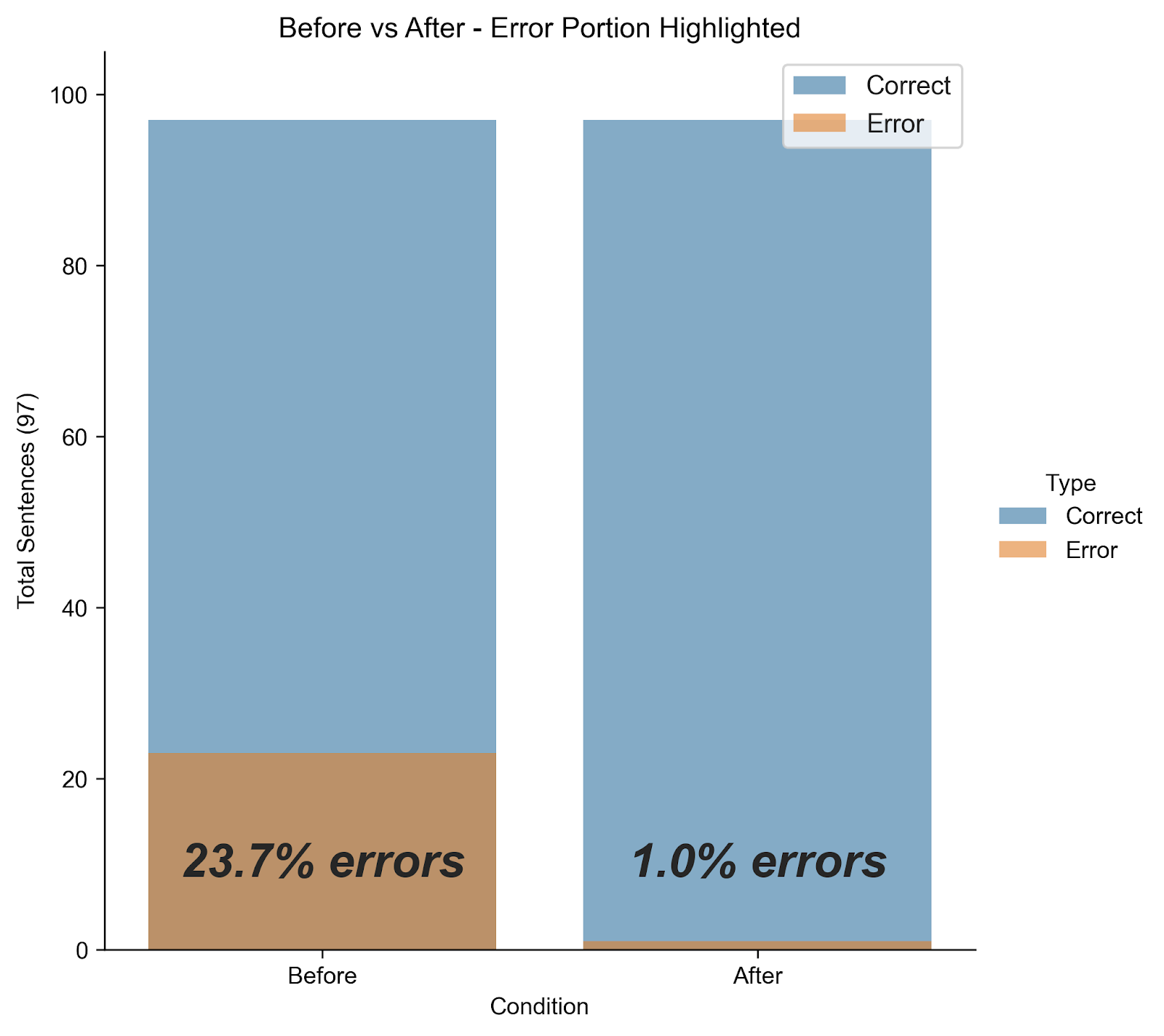

Error rate comparison before and after introducing structured reasoning: 23.7% of responses were incorrect before changes, dropping to just 1.0% after.

Error rate comparison before and after introducing structured reasoning: 23.7% of responses were incorrect before changes, dropping to just 1.0% after.

LLMs can generate fluent responses that appear correct, even when they are not grounded in the system configuration or user input. This often leads to hallucinations, situations where the agent confidently accepts invalid data or invents capabilities that do not exist. The following examples illustrate this issue.

Problem Description:

The Booking ID parameter is defined to follow a strict format:

3 uppercase letters followed by 3 digits.

In this scenario, the agent fails to enforce this rule, while the user provides only two letters of the ID instead of three

Conversation:

User: Cancel my reservation

Agent: To proceed with the cancellation, I'll need your booking ID. Could you please provide it?

User: its ab1234

Agent: I see that your booking ID is AB1234. Is that correct?

In this case, the agent incorrectly normalizes and confirms an invalid value instead of enforcing the defined format rules.

Problem Description:

The system does not include an intent for retrieving blood test results, and it also does not define any parameter named ID for such a flow, but the LLM still hallucinates that this functionality and parameter exist.

Conversation:

User: I’d like to get my blood test results

Agent: Yes, I can locate your recent blood test results. Please provide your ID so I can proceed.

The agent fabricates both a supported capability and a required parameter, creating a misleading interaction that can seriously impact user trust.

LLMs often need to generate answers using information from different parts of the prompt, such as system instructions, user inputs, and task-specific rules. When this context is scattered, inconsistent, or depends on dynamic user data (like changing parameter formats), the model is more likely to make mistakes.

To better understand why these failures occur, we can break them down into several underlying causes that repeatedly surfaced during our analysis.

LLMs may fail to connect all the required pieces correctly. Common issues include:

Selecting the wrong user intent.

Applying validation incorrectly.

Generating outputs that contradict earlier analysis, or hallucinating missing instructions or parameter formats.

The more complex and fragmented the prompt, the more likely these issues become.

LLMs generate text step by step, but their reasoning remains internal. If they make a bad assumption early, the final output will be wrong, without any visible explanation. Prompt tuning alone isn’t enough to fix this. Unless the model is forced to show its reasoning, hallucinations can’t be traced or prevented

Real-world applications require structured, predictable outputs that can be parsed or executed. At the same time, research shows that strict output constraints, like enforcing format consistency, can suppress the model’s reasoning ability and reduce task accuracy. Finding the balance between control and flexibility is critical, but still an open challenge. This trade-off is especially relevant in enterprise environments, where reliability and safe execution matter as much as model creativity.

Preventing LLMs from going off-track required more than prompt tuning. We had to change how the model approaches generating a response in the first place. Instead of letting the LLM jump straight to an answer, we now guide it to reason through the problem step by step, using structured fields, before it produces any output the system actually consumes.

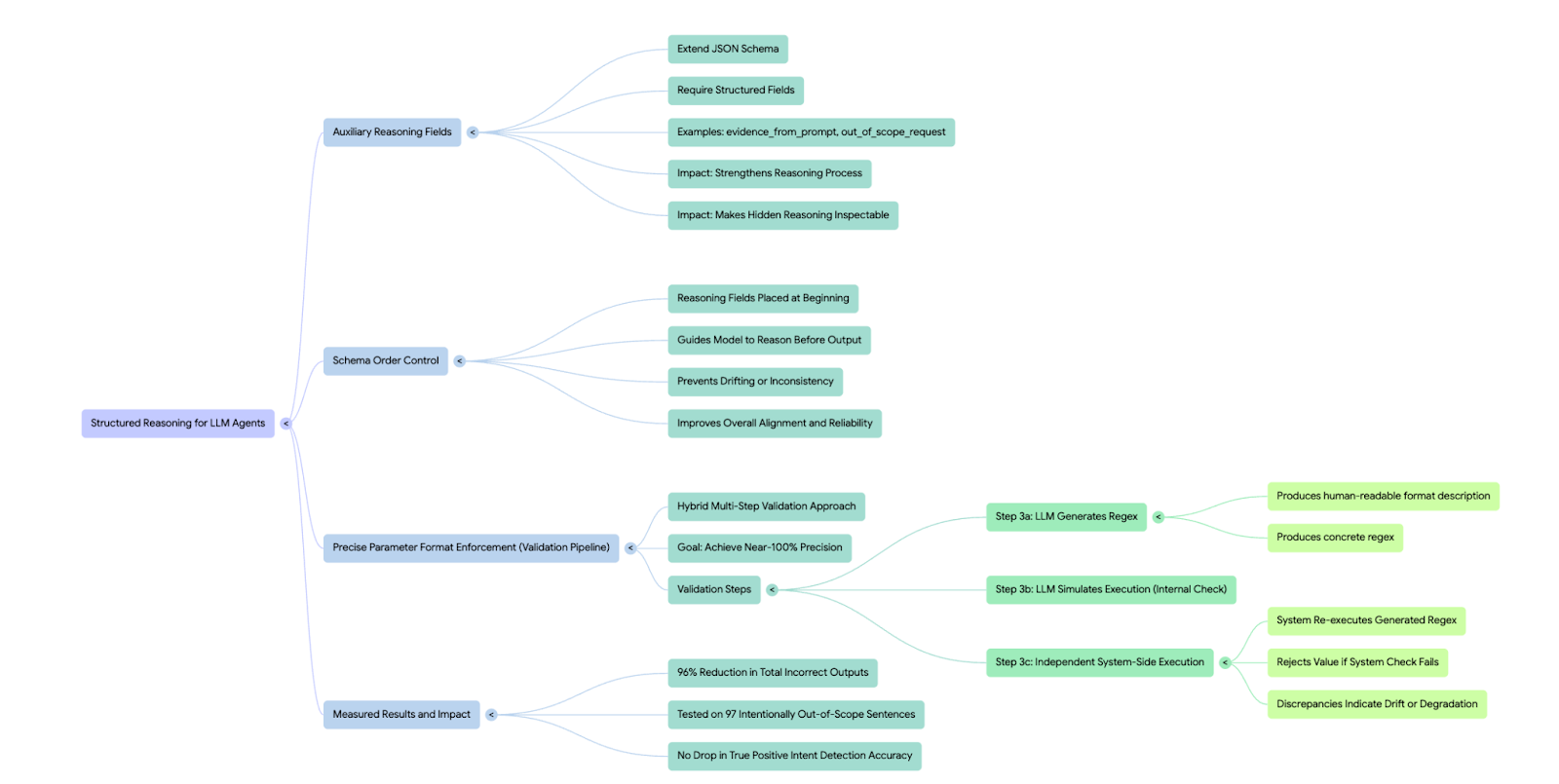

Originally, the LLM response schema included only operational fields, which were either post-processed by the system or returned directly to the user. To strengthen the model’s reasoning and focus its attention on the most critical instructions in the system prompt, we expanded the schema to include explicit, structured reasoning fields that must be generated first.

These reasoning fields are internal to the agent engine and never exposed to end users. They are not consumed directly by the system. However, by requiring their presence, we encourage the model to first analyze the context, identify key constraints, and extract relevant signals before producing the final actionable output. In practice, this turns previously hidden reasoning into structured, inspectable data and significantly improves output reliability.

Below, we show before-and-after examples that demonstrate the impact of this change.

At first glance, reordering JSON fields appears insignificant, since JSON has no semantic notion of order. But, LLMs don’t interpret JSON structurally. They generate it as plain text, one token at a time, based on everything that came before.

Because of this, field order matters. Early fields shape the model’s internal context and reasoning path, while fields generated later are more susceptible to drift or inconsistency. By placing the reasoning fields at the very beginning of the schema and carefully controlling the overall field order, we guide the model to “think before doing.”

This small structural change has a surprisingly large effect. The model is encouraged to analyze the input and constraints first, and only then produce the operational fields the system relies on. Reordering the schema and introducing explicit reasoning fields helps align the model’s generation process with the system’s actual expectations, resulting in more consistent and predictable outputs.

Conversation:

User: Cancel my reservation

Agent: To proceed with the cancellation, I'll need your booking ID. Could you please provide it?

User: its ab1234

Agent: The booking ID should contain 3 letters followed by 3 numbers. Please provide a valid booking ID.

What Changed:

The agent no longer normalizes or confirms invalid data

Format rules are strictly enforced

The user is guided to correct the input before the flow continues

Conversation:

User: I’d like to get my blood test results

Agent: Sorry, I’m not able to retrieve medical test results or request identification for this action. I can help you manage your appointments, and for any other requests, I can route you directly to the clinic.

What Changed:

The agent no longer invents unsupported capabilities

No undefined parameters (such as “ID”) are requested

The response is aligned with actual system capabilities

And if you're wondering how this looks behind the scenes:

{

"detected_intent": {

"id": null,

"name": null

},

"generated_answer":

{

"answer": "Yes, I can locate your recent blood test results. Please provide your ID so I can proceed."

}

}

{

"detected_intent": {

"id": null,

"name": null

},

"generated_answer": {

"evidence_from_prompt": "Supported intents: Book Appointment Reschedule Appointment, Cancel Appointment, Manage Appointments."

"intents_i_am_familiar_with": [

"Book Appointment",

"Reschedule Appointment",

"Cancel Appointment",

"Manage Appointments"

],

"out_of_scope_request": true,

"answer": "Sorry, I’m not able to retrieve medical test results or request identification for this action. I can help you manage your appointments, and for any other requests, I can route you directly to the clinic."

}

}By adding the new reasoning fields: evidence_from_prompt,intents_i_am_familiar_with, out_of_scope_request, the LLM is required to analyze the user input and the configuration before producing the final answer. This forces the model to think through the constraints, understand the boundaries of the scenario, and ground its answer in explicit evidence, resulting in clearer, more accurate, and more controlled responses.

{

"generated_answer": {

"answer": "I see that your booking ID is AB1234. Is that correct?",

"answer_refer_to_parameter_id": "Booking_id",

},

"message_content": "its ab1234",

"parameters": [

{

"parameter_id": "Booking_id",

"parameter_value": "AB1234",

}

]

}

{

"user_messages_analysis": {

"message_content": "its ab1234",

"current_intent": "Cancel_Reservation",

"parameters": [

{

"parameter_id": "Booking_id",

"parameter_value_format": "3 letters followed by 3 numbers (e.g., ABC123)",

"parameter_value_format_regex": "^[A-Z]{3}[0-9]{3}$",

"parameter_extracted_part": "ab1234",

“simulate_regex_execution”:false

}

]

},

"generated_answer": {

"evidence_from_prompt": "Booking_id format: 3 letters followed by 3 numbers (e.g., ABC123).",

"answer": "The booking ID should contain 3 letters followed by 3 numbers. Please provide a valid booking ID.",

"answer_refer_to_parameter_id": "Booking_id",

}

}Together, these fields require the model to validate the extracted parameter, check it against the expected format, and explicitly state whether the value is acceptable before producing the final answer. This ensures that the response is fully grounded in the model’s reasoning steps and prevents cases where the LLM confidently confirms an invalid parameter or moves the conversation forward based on incorrect assumptions.

We were satisfied with the results of these actions, but we remained concerned about potential drifts in parameter format validation. To address this, we introduced a measurable validation layer that monitors extraction rates and catches cases where the LLM still misinterprets natural-language format rules.

Adding auxiliary reasoning parameters and reordering the schema delivered meaningful improvements. Still, we were aiming for two additional goals:

achieving near-100% precision in parameter format enforcement, and

gaining strong production monitoring to detect when further tuning might be needed.

To support these goals, we utilized a multi-step validation pipeline.

As explained in the previous section, part of the auxiliary reasoning fields requires the LLM to produce:

parameter_value_format: A human-readable descriptionparameter_value_format_regex: A regex pattern generated from the free-text descriptionsimulate_regex_execution: The LLM’s assessment of whether the extracted value satisfies the regex pattern.

This forces the LLM to translate natural language into a machine-checkable format.

As part of its reasoning, the model answers "simulate_regex_execution": true | false, indicating whether it believes the regex works. This has already increased the accuracy significantly.

To guarantee precision, after receiving the LLM response, another verification layer was added: the system independently re-executes the generated regex from the parameter_value_format_regex field (with guardrails to prevent unsafe patterns). If the regex does not match, even if the LLM said it should, the agent rejects the value and guides the user to correct it.

This hybrid approach, of combining LLM reasoning with programmatic validation, produced near-perfect precision in parameter format enforcement and resolved the last inconsistencies we observed.

This validation provides us with a measurable metric for tracking the LLM’s performance over time. Whenever the LLM predicts that the regex should match (simulate_regex_execution = true) but the system-side regex execution fails, we immediately detect the discrepancy. We expect these cases to remain extremely rare, and if an upward trend appears, it serves as an early indicator of a drift or degradation in the LLM’s behavior.

To evaluate the effect of the new reasoning schema, we created a virtual agent for a medical clinic, and generated 97 intentionally out-of-scope sentences. For all of them, the correct behavior was:

intent = null, and

The generated answer should politely reject the request

Minimize cases where simulate_regex_execution = true but the system-side regex execution fails.

We ran a comparison to evaluate the impact of introducing reasoning fields:

We also validated cases where the virtual agent should detect an intent. True Positive (TP) detection remained unchanged: no drop, no degradation. The new reasoning layer reduces hallucinations without weakening correct intent detection.

Metric | Before | After | Improvement |

False Positive intents | 14 | 1 | 93% reduction |

Wrong answers | 9 | 0 | Eliminated |

Total incorrect outputs | 23 | 1 | 96% reduction |

True Positive accuracy | No impact |

There were no instances in which the LLM predicted simulate_regex_execution = true while the system-side regex execution failed. In other words, all improvements were achieved by the LLM itself.

Similar patterns were observed across other tasks that the LLM is required to generate.

Introducing explicit reasoning fields, restructuring the response schema, and adding system-side regex execution had a significant positive effect on overall LLM behavior. These changes substantially reduced hallucinations and out-of-scope responses, while preserving the model’s ability to correctly identify valid inputs. As a result, the LLM’s outputs became more consistent, predictable, and better aligned with the intended configuration and logic.

As this work evolves, we continue investigating ways to make our system’s reasoning and decisions more explainable, both to support real-world use cases and to uphold strong AI governance.

A high-level overview of the reasoning structure and validation pipeline added to improve LLM response reliability and reduce hallucinations.

A high-level overview of the reasoning structure and validation pipeline added to improve LLM response reliability and reduce hallucinations.

Have a question or something to share? Join the conversation on the Vonage Community Slack, stay up to date with the Developer Newsletter, follow us on X (formerly Twitter), subscribe to our YouTube channel for video tutorials, and follow the Vonage Developer page on LinkedIn, a space for developers to learn and connect with the community. Stay connected, share your progress, and keep up with the latest developer news, tips, and events!